
Introduction to Rate Limiting

Have you ever wondered how some websites protect themselves from DDoS attacks, or why an API (for example, the GitHub API) responds with status code HTTP 429 when you call it too many times? The mechanism behind both is rate limiting.

What is Rate Limiting?

Rate limiting controls the amount of incoming traffic to a service or an API. For example, an API might use a rate limiter to allow each user 100 requests per hour. If you send more than 100 requests within that hour, you receive an HTTP 429 Too Many Requests error. This keeps incoming traffic under control and helps mitigate DDoS attacks.

Types of Rate Limiting

- Server-level rate limiting
  - Server-level rate limiting can be done with an Nginx server.
  - When our service runs behind Nginx, we can control traffic based on the incoming URL or IP address.
  - You can learn more about it here.
- API-level rate limiting
  - API-level rate limiting can be achieved by writing a middleware on top of the API endpoints.
  - The middleware inspects every request and decides whether it should be processed or rejected.
  - If the middleware rejects a request, the API responds with status code HTTP 429.

In this post, we focus on API-level rate limiting in Go.

API level Rate Limiting

Let's explore API-level rate limiting by implementing a simple rate limiter, as middleware on an API endpoint, that accepts one request per second.

// main.go
package main

import (
	"fmt"
	"log"
	"net/http"
)

func main() {
	// Create a new HTTP request multiplexer
	mux := http.NewServeMux()

	// Wrap helloHandler with the ratelimit middleware
	mux.HandleFunc("/hello", ratelimit(helloHandler))

	fmt.Println("Server listening on :8888...")
	// ListenAndServe only returns on error, so report it
	log.Fatal(http.ListenAndServe(":8888", mux))
}

func helloHandler(w http.ResponseWriter, r *http.Request) {
	w.Write([]byte("Hello World!\n"))
}

The above code serves the endpoint localhost:8888/hello. We limit the number of incoming calls to this endpoint by wrapping helloHandler with the ratelimit middleware, which runs before helloHandler and decides whether each incoming request should be processed or rejected.

Let's implement ratelimit using the golang.org/x/time/rate package, which implements the token bucket algorithm discussed in the next section.

// RateLimiter.go
package main

import (
	"golang.org/x/time/rate"
	"net/http"
)
var limiter = rate.NewLimiter(1, 1) // 1 token per second, burst of 1

func ratelimit(next http.HandlerFunc) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if !limiter.Allow() { // check whether the request should be processed
			http.Error(w, "Limit on Number requests has Exceeded", http.StatusTooManyRequests)
			return
		}
		next.ServeHTTP(w, r)
	}
}

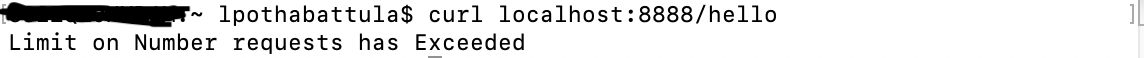

First, we create a global Limiter by calling rate.NewLimiter with both the rate and the burst set to one, so the bucket holds at most one token and gains one token per second. The ratelimit function accepts helloHandler as a parameter and returns a new http.HandlerFunc. For each request, this new handler calls limiter.Allow() to check whether the request should be processed. If not, it responds with status code http.StatusTooManyRequests (429); otherwise it passes the request on to helloHandler.

For example, I ran the above code locally and sent two requests concurrently. As expected, one of them failed with the error below:

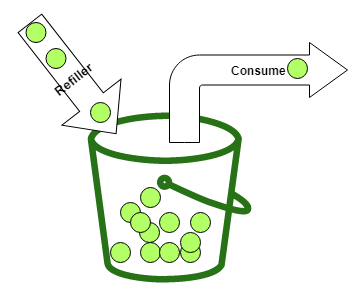

How does the Token Bucket Algorithm work?

- Let's define a struct TokenBucket with Rate, Burst and Available as fields.

package main

import (
	"sync"
	"time" // used by Fill
)

// Burst is the maximum number of tokens the bucket can hold
// Rate is the number of tokens added to the bucket every second
// Available is the number of tokens currently in the bucket
type TokenBucket struct {
	Rate      int
	Burst     int
	Available int
	mu        sync.Mutex // guards Available; only exclusive locking is needed
}

- The Fill method adds Rate tokens to the bucket every second, without exceeding Burst.

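func (tokenBucket *TokenBucket) Fill() {
	ticker := time.NewTicker(time.Second)
	for range ticker.C {
		tokenBucket.mu.Lock()
		// Add Rate tokens, but never hold more than Burst tokens
		tokenBucket.Available += tokenBucket.Rate
		if tokenBucket.Available > tokenBucket.Burst {
			tokenBucket.Available = tokenBucket.Burst
		}
		// Unlock explicitly: a deferred Unlock would only run when Fill
		// returns, so the next tick (and every Take) would block forever
		tokenBucket.mu.Unlock()
	}
}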

- The Take method returns true and removes one token if a token is available; otherwise it returns false.

func (tokenBucket *TokenBucket) Take() bool {
	tokenBucket.mu.Lock()
	defer tokenBucket.mu.Unlock()
	if tokenBucket.Available > 0 {
		tokenBucket.Available--
		return true
	}
	return false
}

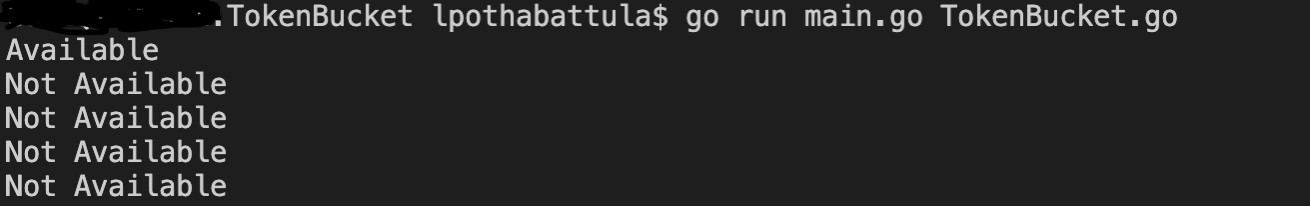

- In the code snippet below, a TokenBucket with rate and burst equal to 1 is created by calling NewTokenBucket (not shown in this post). The for loop then spawns five goroutines, each of which tries to take a token.

package main
import (
	"fmt"
	"sync"
)
func main() {
	tokenBucket := NewTokenBucket(1, 1)
	var wg sync.WaitGroup
	for i := 0; i < 5; i++ {
		wg.Add(1)
		go func(wg *sync.WaitGroup) {
			defer wg.Done()
			if tokenBucket.Take() {
				fmt.Println("Available")
			} else {
				fmt.Println("Not Available")
			}
		}(&wg)
	}
	wg.Wait()
}

The result of running the above code is shown in the image below. The first goroutine to call Take got the token, and the remaining four failed because only one token was available at that moment.

The complete implementation of the token bucket algorithm is available here.

More Use Cases

- We have discussed a use case that limits the number of requests globally. What if we want to limit the number of requests per user?
  - Hint: an in-memory cache, with old entries invalidated.
- How would you scale this use case when there are millions of users?
  - Hint: an external cache such as Redis or Memcached.

In conclusion, rate limiting helps protect APIs from DDoS attacks and keeps incoming traffic under control.